Microsoft Certified: Azure AI Engineer Associate Certification Practice Test Questions, Microsoft Certified: Azure AI Engineer Associate Exam Dumps

ExamSnap provides Microsoft Certified: Azure AI Engineer Associate Certification Practice Test Questions and Answers, a Video Training Course, a Study Guide, and Exam Dumps to help you pass. The Microsoft Certified: Azure AI Engineer Associate Certification Exam Dumps & Practice Test Questions in the VCE format are verified by IT trainers who have more than 15 years of experience in their field. Additional materials include a study guide and a video training course designed by the ExamSnap experts.

The Microsoft Certified: Azure AI Engineer Associate Certification stands as a distinguished professional credential that validates one’s expertise in developing, managing, and deploying artificial intelligence solutions on Microsoft Azure. As artificial intelligence continues to revolutionize industries, the demand for skilled professionals who can harness Azure’s AI capabilities has increased significantly. This certification focuses on practical knowledge, covering the design, implementation, and management of AI services integrated into business solutions.

The certification primarily revolves around Microsoft’s AI-102 exam, which tests the ability to design and implement Azure AI solutions. It provides professionals with an in-depth understanding of AI tools and cognitive services available within Azure, including natural language processing, computer vision, and conversational AI. The credential not only verifies technical proficiency but also demonstrates one’s ability to apply AI technology in real-world scenarios to optimize business operations.

In recent years, artificial intelligence has evolved from being a futuristic concept to a core component of everyday business applications. Organizations now rely on AI systems to analyze data, automate repetitive tasks, improve decision-making, and enhance user experiences. Microsoft Azure plays a crucial role in this transformation by providing a powerful suite of AI tools that allow developers and engineers to build intelligent cloud-based applications efficiently.

The Microsoft Certified: Azure AI Engineer Associate Certification enables professionals to master these technologies and utilize Azure services effectively. It helps bridge the gap between traditional software engineering and modern AI development by equipping individuals with the necessary skills to build scalable and secure AI-driven systems.

AI engineers are responsible for creating applications that can simulate human intelligence, analyze large data sets, and provide valuable insights. With Azure’s ecosystem, these tasks become easier to execute thanks to integrated services such as Azure Cognitive Services, Azure Machine Learning, and the Bot Framework. The certification ensures that professionals possess both theoretical knowledge and practical experience in implementing these technologies.

An Azure AI Engineer works at the intersection of software development, data science, and cloud computing. They design and deploy AI solutions that automate tasks, interpret data, and deliver meaningful insights. Their work often involves integrating AI capabilities into existing systems or creating entirely new intelligent applications that leverage Azure’s infrastructure.

The responsibilities of an AI engineer include designing scalable AI architectures, developing natural language understanding systems, implementing computer vision solutions, and building chatbots for enhanced customer interaction. Additionally, AI engineers must manage data security, ensure compliance with privacy standards, and optimize system performance.

Professionals in this field collaborate with data scientists, cloud architects, and software developers to ensure AI solutions are efficient and aligned with business objectives. The Microsoft Certified: Azure AI Engineer Associate Certification provides the technical foundation needed to perform these roles effectively, focusing on Azure’s AI tools and frameworks.

The certification requires passing Exam AI-102, titled Designing and Implementing a Microsoft Azure AI Solution. This exam evaluates the candidate’s ability to use Azure’s AI services to create, manage, and deploy solutions that solve real-world problems.

The key areas tested in the exam include planning AI solutions, implementing computer vision, natural language processing, and conversational AI, as well as monitoring and optimizing AI applications. Candidates must demonstrate practical skills in building AI-powered applications that integrate with other Azure services such as Azure Functions, Logic Apps, and Azure Storage.

The AI-102 exam contains a combination of multiple-choice questions, drag-and-drop tasks, and scenario-based case studies. These questions test both conceptual understanding and applied knowledge. Candidates are expected to have hands-on experience with Azure Cognitive Services, Azure Bot Service, and Azure Machine Learning before attempting the exam.

The Azure AI Engineer Associate Certification assesses various technical competencies essential for designing and managing AI applications. The core skills covered include solution design, natural language processing, computer vision, and conversational AI development.

One of the key objectives of the certification is to ensure candidates can design robust AI solutions using Azure resources. This includes identifying appropriate cognitive services, determining data flow, and selecting the right tools to meet specific business goals. Candidates learn to analyze requirements and map them to Azure AI services that best fulfill those needs.

Natural language processing (NLP) plays a vital role in modern AI systems. Through the certification, professionals gain expertise in using Azure AI Language services to analyze and understand textual data. They learn to perform tasks such as sentiment analysis and entity recognition, and to translate text with the companion Azure AI Translator service. This knowledge is particularly valuable for developing chatbots, virtual assistants, and other language-driven applications.

Computer vision enables machines to interpret visual information such as images and videos. The certification tests proficiency in using Azure’s Computer Vision, Custom Vision, and Face API to develop applications capable of recognizing objects, detecting faces, and classifying images. These solutions are used in diverse industries, from healthcare diagnostics to retail inventory management.

Conversational AI focuses on creating intelligent agents that interact with users through natural language. Azure Bot Service and Azure Cognitive Services are central to this domain. The certification covers how to build, train, and deploy chatbots that can understand user intent and provide relevant responses. Candidates also learn to integrate these bots with communication channels like Microsoft Teams and websites.

Security and governance are critical aspects of AI engineering. The certification ensures candidates understand how to monitor, secure, and optimize AI applications. This includes managing API keys, configuring authentication, and ensuring compliance with data privacy regulations. Azure provides built-in tools for monitoring performance metrics and cost optimization, which professionals must master as part of their learning journey.
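As a minimal sketch of the key-management point above, the following Python reads a resource key from an environment variable instead of hard-coding it, and masks the key before it is logged. The variable name `AZURE_AI_KEY` and both helper functions are hypothetical names chosen for illustration; `Ocp-Apim-Subscription-Key` is the header that Azure AI services accept for key-based authentication.

```python
import os


def build_auth_headers(env_var: str = "AZURE_AI_KEY") -> dict:
    """Read the resource key from the environment and return request headers."""
    key = os.environ.get(env_var)
    if not key:
        # Fail early rather than sending an unauthenticated request.
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/json",
    }


def mask_key(key: str) -> str:
    """Return a log-safe form of a key that shows only its last four characters."""
    return "*" * max(len(key) - 4, 0) + key[-4:]
```

Keeping the key out of source code means it can be rotated without a redeploy. In production, a managed identity or a secret store is usually preferable to a raw key.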

Preparing for the Microsoft Certified: Azure AI Engineer Associate Certification requires a well-structured approach that combines theoretical knowledge with hands-on experience. Microsoft provides several official learning paths through Microsoft Learn, which cover each exam objective in detail.

The recommended preparatory course is AI-102T00, which shares its title with the exam: Designing and Implementing a Microsoft Azure AI Solution. This instructor-led training program provides an in-depth understanding of AI solution development on Azure. It covers all key modules, including cognitive services, computer vision, and conversational AI, supported by real-world lab exercises.

Self-paced learners can also use online tutorials, documentation, and sandbox environments to practice deploying AI models. The hands-on aspect of preparation cannot be overstated; working directly with Azure services enhances understanding and builds confidence in managing complex scenarios.

Candidates should also take practice tests to evaluate their readiness. These mock exams simulate the structure and difficulty of the real AI-102 exam, helping candidates identify weak areas that need improvement. Reviewing case studies and Microsoft’s official documentation further strengthens comprehension of practical applications.

Earning the Azure AI Engineer Associate Certification offers numerous benefits, both professionally and personally. It validates an individual’s expertise in Azure’s AI technologies and positions them as a skilled professional in a growing field.

Holding a Microsoft certification establishes credibility in the global job market. Employers recognize the value of certified professionals who possess verifiable skills aligned with industry standards. The Azure AI Engineer Associate credential demonstrates the ability to design and deploy AI-driven solutions efficiently, making certified individuals more attractive to recruiters and clients.

The certification opens doors to various career paths, including roles such as AI Engineer, Machine Learning Developer, Cognitive Services Specialist, and Cloud Solutions Architect. These positions are in high demand across industries such as finance, healthcare, retail, and manufacturing.

Azure-certified AI professionals often earn higher salaries compared to their non-certified peers. The certification reflects practical expertise in one of the most sought-after technologies, which naturally leads to career growth and better job opportunities.

Through certification training, professionals gain hands-on experience with Azure AI tools. This includes developing custom AI models, integrating APIs, and deploying intelligent systems on cloud platforms. Such experience not only enhances technical skills but also builds the confidence to handle complex AI projects independently.

The technology landscape evolves constantly, and continuous learning is essential to remain relevant. The Azure AI Engineer certification ensures professionals are up to date with the latest AI advancements and Azure services. It encourages ongoing learning through Microsoft’s renewal process, which allows certified professionals to refresh their credentials periodically.

Azure’s AI capabilities have transformed numerous industries, demonstrating the real-world value of this certification. AI engineers certified in Azure can work on projects that range from customer support automation to predictive analytics and process optimization.

In healthcare, Azure AI is used for image-based diagnostics, patient data analysis, and predictive health monitoring. Financial institutions use AI to detect fraudulent transactions, assess credit risks, and automate compliance procedures. Retail companies rely on computer vision and natural language models to improve customer engagement, manage inventory, and personalize marketing strategies.

Manufacturing sectors use Azure AI to predict equipment failures and optimize production lines through intelligent automation. Each of these applications requires skilled professionals who can design and deploy AI systems efficiently, which is precisely what the certification prepares candidates to do.

While the certification offers immense rewards, it also comes with challenges. Azure’s AI services encompass a wide range of technologies, each requiring detailed understanding and practice. Beginners may initially struggle with concepts such as model training, API integration, and service deployment.

However, Microsoft provides extensive documentation, learning modules, and community forums that support learners throughout their journey. Engaging in hands-on practice remains the most effective method for mastering these skills. Candidates are encouraged to work on personal projects that replicate real-world problems, such as building chatbots or image recognition systems.

Understanding Python programming, REST APIs, and JSON data formats is also beneficial, as these technologies play a crucial role in Azure AI development. Familiarity with Azure DevOps, security protocols, and version control further enhances practical competence.

Microsoft ensures that all certifications remain relevant by requiring periodic renewal. The Azure AI Engineer Associate Certification can be renewed through Microsoft Learn at no cost. The renewal assessment focuses on updated content that reflects new features and changes within Azure’s AI ecosystem.

Continuous learning ensures that certified professionals stay ahead in a fast-evolving technological landscape. By staying updated with new Azure releases and participating in community discussions, AI engineers can maintain their expertise and continue to contribute effectively to AI innovation.

Microsoft certifications are recognized worldwide as benchmarks of professional competence. The Azure AI Engineer Associate credential, in particular, is highly valued by employers seeking professionals capable of integrating AI with business applications. Organizations across industries rely on Microsoft-certified talent to lead their AI transformation initiatives.

As more enterprises migrate to cloud-based systems, the demand for AI professionals skilled in Azure will continue to grow. Holding this certification not only demonstrates expertise in Microsoft’s AI technologies but also reflects a commitment to excellence and innovation.

Artificial intelligence is at the core of digital transformation. The future of AI engineering involves greater automation, predictive analytics, and integration of AI into everyday business operations. Professionals certified as Azure AI Engineers are well-positioned to take advantage of these developments.

As Microsoft continues to expand its AI capabilities, new opportunities will emerge in areas such as autonomous systems, voice recognition, and advanced computer vision. The certification ensures that professionals possess the foundational knowledge and practical experience to adapt to these changes.

By mastering Azure’s AI ecosystem, certified individuals can contribute to projects that drive innovation, enhance productivity, and redefine how organizations operate in the digital era.

The Microsoft Certified: Azure AI Engineer Associate Certification allows professionals to explore the depth of Azure’s artificial intelligence ecosystem. Microsoft Azure offers a broad range of AI services that can be integrated into enterprise systems, enabling automation, intelligent data analysis, and enhanced decision-making. Understanding these services and how they interconnect forms the foundation of the certification’s learning journey.

Azure’s AI tools include Azure Cognitive Services, Azure Machine Learning, Azure Bot Service, and Azure Cognitive Search. These services enable the creation of applications that understand, interpret, and act on human language, vision, and intent. Each component plays a specific role in helping developers design end-to-end AI solutions that solve real-world business challenges. The certification trains professionals to identify the right combination of services for a given task, ensuring scalability and performance optimization.

Azure Cognitive Services, for example, provides pre-built APIs for vision, speech, language, and decision-making tasks. Azure Machine Learning allows developers to train and deploy custom models. The Bot Service supports conversational interfaces, while Cognitive Search provides advanced search capabilities enhanced by AI. Through hands-on experience with these technologies, certified Azure AI Engineers gain the ability to craft solutions that integrate seamlessly into cloud environments.

One of the key areas covered in the Azure AI Engineer Associate Certification is Azure Cognitive Services. These services are divided into categories that address specific areas of artificial intelligence: vision, language, speech, and decision-making.

Azure Vision Services empower applications to analyze and interpret visual content. Using the Computer Vision API, developers can extract information from images, identify objects, detect text, and categorize content. The Custom Vision service allows users to build their own image classification models tailored to business-specific needs. This flexibility enables AI engineers to create models that recognize industry-specific patterns, such as identifying defective products on a manufacturing line or detecting certain types of medical imagery.
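To make the defect-detection scenario concrete, here is a small Python sketch that picks the most likely tag from an image classification result. It assumes a response shaped like a Custom Vision prediction, with a `predictions` list whose items carry `tagName` and `probability`. The sample data, the function name, and the 0.5 threshold are illustrative assumptions, not values from the service.

```python
def top_prediction(response: dict, min_probability: float = 0.5):
    """Return (tag, probability) for the best prediction, or None if none is confident."""
    predictions = response.get("predictions", [])
    confident = [p for p in predictions if p["probability"] >= min_probability]
    if not confident:
        return None
    best = max(confident, key=lambda p: p["probability"])
    return best["tagName"], best["probability"]


# Hypothetical result for one photo taken on a production line.
sample_response = {
    "predictions": [
        {"tagName": "defective", "probability": 0.91},
        {"tagName": "ok", "probability": 0.09},
    ]
}

print(top_prediction(sample_response))
```

Returning `None` below the threshold lets the calling application route uncertain images to a human inspector instead of acting on a weak guess.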

Face API is another significant component of the vision suite, offering capabilities for face detection and identification. Microsoft has retired the feature that inferred emotions from faces and restricts identification to approved customers under its Limited Access policy, so new solutions should not depend on emotion recognition. Face technology is used in security systems, access control, and customer engagement applications. The certification ensures that professionals can implement these tools effectively while maintaining compliance with ethical and privacy standards.

Language Services within Azure Cognitive Services enable applications to process and understand textual information. The Azure AI Language API supports functions such as sentiment analysis, key phrase extraction, and language detection, while translation is handled by the separate Azure AI Translator service. These tools help businesses analyze customer feedback, detect trends, and personalize user experiences.
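The sketch below shows one way a sentiment analysis request body might be assembled in Python before it is posted to a Language resource. The body layout (`kind`, `parameters`, `analysisInput.documents`) follows the shape used by the service's analyze-text REST operation as the editor understands it; treat the field names as assumptions and check them against the current API reference. The function name is hypothetical.

```python
import json


def build_sentiment_request(texts, language="en"):
    """Build the JSON body for a batch sentiment analysis call."""
    documents = [
        {"id": str(i), "language": language, "text": text}
        for i, text in enumerate(texts, start=1)
    ]
    return {
        "kind": "SentimentAnalysis",
        "parameters": {"modelVersion": "latest"},
        "analysisInput": {"documents": documents},
    }


body = build_sentiment_request(
    ["The checkout was quick and easy.", "My order arrived damaged."]
)
print(json.dumps(body, indent=2))
```

Each document gets its own `id` so that results in the response can be matched back to the input text.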

Professionals preparing for the certification learn how to build applications that can interpret human language contextually. The service supports multiple languages, making it valuable for global enterprises. It also integrates with Azure OpenAI Service, which expands the range of natural language capabilities available for creating intelligent assistants and generative AI applications.

Speech recognition and synthesis are central to conversational AI. Azure’s Speech Services allow developers to convert spoken words into text and text into speech. They also support speech translation, enabling real-time communication between people speaking different languages.

Through certification training, candidates gain practical experience in implementing speech recognition for call center automation, voice-enabled devices, and transcription services. They also learn to fine-tune speech models so that they cope with accents, domain-specific vocabulary, and background noise.

Decision-making capabilities are supported by Azure Personalizer and Anomaly Detector. These services allow developers to create adaptive systems that learn from user behavior. Personalizer enhances user experiences by delivering recommendations tailored to individual preferences, while Anomaly Detector identifies unusual patterns in data streams, which is useful for fraud detection and operational monitoring.
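To illustrate what "identifying unusual patterns" means in practice, the following Python flags points that sit far from the mean of a series. This is a plain z-score check written for illustration only; it is not the algorithm used by Anomaly Detector, and the function name, sample readings, and threshold of 2.0 are all assumptions.

```python
from statistics import mean, pstdev


def find_anomalies(values, threshold=2.0):
    """Return the indexes of values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # A perfectly flat series has no outliers.
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical sensor readings with a single spike.
readings = [10, 11, 9, 10, 12, 10, 11, 95, 10, 9]
print(find_anomalies(readings))
```

A simple check like this is sensitive to the size of the series and ignores seasonality and trend, which is one reason a managed service is attractive for real data streams.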

Azure Machine Learning (Azure ML) is a powerful platform that supports the development, training, and deployment of custom AI models. It provides a collaborative workspace where data scientists and AI engineers can manage datasets, build predictive models, and automate machine learning workflows.

The Azure AI Engineer Associate Certification emphasizes the ability to use Azure ML for model lifecycle management. Candidates learn how to select algorithms, preprocess data, and evaluate model performance. They also explore techniques for deploying models as REST APIs that can be integrated into web applications or business processes.
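Deploying a model as a REST endpoint in Azure ML typically involves an entry script that exposes an `init()` function, run once when the service starts, and a `run()` function, called for each request. The Python below sketches that pattern with a stand-in linear model held in a dictionary. The weights, the `data` field in the request, and the response layout are assumptions made for illustration; a real script would load a trained model file instead.

```python
import json

model = None


def init():
    """Load the model once at startup."""
    global model
    # Stand-in for loading a trained model from the deployment's model folder.
    model = {"weights": [0.5, -0.25], "bias": 1.0}


def run(raw_data):
    """Score one request whose JSON body looks like {"data": [[x1, x2], ...]}."""
    try:
        rows = json.loads(raw_data)["data"]
        predictions = [
            sum(w * x for w, x in zip(model["weights"], row)) + model["bias"]
            for row in rows
        ]
        return {"predictions": predictions}
    except Exception as exc:
        # Return the error to the caller instead of crashing the service.
        return {"error": str(exc)}


init()
print(run(json.dumps({"data": [[2, 4], [4, 0]]})))
```

Separating loading from scoring keeps per-request latency low, because the model is read into memory only once.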

Azure ML supports both code-based and low-code development, allowing flexibility depending on the user’s expertise. The platform integrates with popular tools such as Python, TensorFlow, PyTorch, and scikit-learn. It also offers the Automated ML feature, which simplifies model selection and tuning for faster deployment.

Another important aspect of Azure ML is its ability to scale. With Azure’s infrastructure, machine learning models can handle massive datasets and high computational demands without compromising performance. This scalability makes it possible to deploy AI systems in industries like healthcare, finance, and retail where data volumes are significant.

Conversational AI represents one of the most practical applications of artificial intelligence, allowing machines to interact with users through natural dialogue. The Azure Bot Service provides a platform to create, test, and deploy chatbots that communicate intelligently with users.

The certification ensures that professionals understand how to design and implement chatbots using Azure Bot Framework and integrate them with Azure Cognitive Services. These chatbots can perform various functions, from answering customer queries to managing appointments and assisting with technical support.

The Azure Bot Service connects easily with channels like Microsoft Teams, Skype, and web applications, allowing businesses to provide consistent communication experiences across platforms. Azure’s QnA Maker and Language Understanding (LUIS) tools, which Microsoft has been replacing with the custom question answering and conversational language understanding features of Azure AI Language, further enhance the bot’s capabilities by enabling it to understand user intent and respond appropriately.
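Once a language model has predicted the user's intent, the bot still has to decide what to do with it. The Python sketch below routes a prediction to a handler and falls back to a clarifying question when confidence is low. The prediction dictionary (`top_intent`, `confidence`, `entities`), the intent names, and the 0.7 threshold are hypothetical and do not reproduce any specific Azure response format.

```python
def handle_order_status(entities):
    return f"Looking up order {entities.get('order_id', 'unknown')}."


def handle_book_appointment(entities):
    return "Let's find a time that works for you."


HANDLERS = {
    "OrderStatus": handle_order_status,
    "BookAppointment": handle_book_appointment,
}

FALLBACK = "Sorry, I didn't catch that. Could you rephrase?"


def route(prediction, min_confidence=0.7):
    """Pick a reply based on the predicted intent, or ask the user to rephrase."""
    handler = HANDLERS.get(prediction.get("top_intent"))
    if handler is None or prediction.get("confidence", 0.0) < min_confidence:
        return FALLBACK
    return handler(prediction.get("entities", {}))


print(route({"top_intent": "OrderStatus", "confidence": 0.92,
             "entities": {"order_id": "A123"}}))
```

Keeping the handlers in a lookup table makes it straightforward to add new intents without touching the routing logic.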

As part of their training, candidates learn to secure, monitor, and optimize chatbots using Azure tools. They also gain insights into analytics that help measure user satisfaction and improve bot performance over time.

Designing AI solutions involves more than just building models. It requires understanding how different Azure services integrate to form a cohesive system. The certification trains professionals to architect AI solutions that are scalable, reliable, and maintainable.

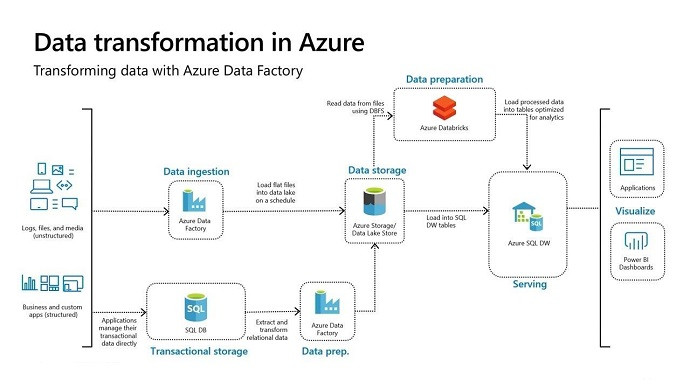

An effective AI architecture in Azure typically includes several layers: data ingestion, processing, AI model implementation, and user interface. Data ingestion may involve pulling data from Azure Data Lake or Cosmos DB, while processing may use Azure Synapse Analytics for transformation. AI models, developed through Azure Machine Learning or Cognitive Services, are then deployed using containers or REST APIs.
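The layered structure described above can be sketched as a chain of small functions, one per layer. In this Python illustration every stage is a local stand-in: the keyword rule replaces a real model call, and none of the function names correspond to Azure APIs.

```python
def ingest(raw_records):
    """Data ingestion layer: drop empty records and trim whitespace."""
    return [r.strip() for r in raw_records if r and r.strip()]


def process(records):
    """Processing layer: normalize text before it reaches the model."""
    return [r.lower() for r in records]


def apply_model(records):
    """Model layer: a keyword rule standing in for a deployed AI model."""
    return [(r, "needs_review" if "refund" in r else "ok") for r in records]


def present(scored):
    """Interface layer: format results for display."""
    return [f"{text} -> {label}" for text, label in scored]


def run_pipeline(raw_records):
    return present(apply_model(process(ingest(raw_records))))


print(run_pipeline(["  Refund please ", "", "Great service"]))
```

Because each layer only depends on the output of the previous one, a stage can be swapped for a managed service without rewriting the others.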

Security and compliance are also key elements of solution design. Azure provides role-based access control, encryption, and network security to protect sensitive data. Certified AI engineers must be capable of implementing these controls while ensuring smooth system integration.

Monitoring and maintenance are crucial aspects of AI architecture. Azure Monitor and Application Insights allow professionals to track model performance, detect anomalies, and optimize costs. These skills ensure the AI solutions remain efficient and reliable after deployment.

One of the strengths of the Microsoft Certified: Azure AI Engineer Associate Certification is its focus on practical applications. Throughout the learning journey, candidates engage in hands-on projects that simulate real-world business problems.

For instance, learners might create an image classification model using Custom Vision to identify product defects in a factory. Another project could involve building a chatbot that assists customers with order tracking using Azure Bot Service and Language Understanding.

These projects not only build technical confidence but also demonstrate how AI can be used to solve tangible business challenges. By integrating different Azure services, professionals develop the ability to create comprehensive solutions that improve productivity and efficiency.

Such practical experience is valuable when applying AI in industries that rely on data-driven decision-making. Whether it’s predicting customer behavior in retail or optimizing logistics in supply chain management, the certification equips professionals with the skills needed to deliver measurable business value.

Success in the AI-102 exam requires both theoretical understanding and applied knowledge. Microsoft recommends that candidates have at least six months of hands-on experience with Azure AI services before attempting the exam.

The preparation process involves several steps. First, candidates should thoroughly study the official Microsoft documentation, which outlines the services covered in the exam. Next, they should follow structured learning paths on Microsoft Learn that align with the exam’s objectives.

Hands-on labs are an essential part of preparation. They provide a controlled environment where learners can experiment with AI tools, test APIs, and deploy models. Practice tests are equally important, as they familiarize candidates with the question patterns and difficulty level.

The AI-102 exam is not just about memorization; it assesses the ability to apply knowledge to solve complex problems. Therefore, candidates must focus on understanding use cases and best practices for each Azure service. Reviewing case studies and working on sample projects can greatly enhance readiness.

Achieving the Azure AI Engineer Associate Certification opens doors to numerous career opportunities. Certified professionals can pursue roles such as AI Engineer, Machine Learning Developer, Cognitive Services Developer, and Cloud AI Consultant.

Industries across the world are adopting AI at an unprecedented pace. Businesses are using AI to automate processes, analyze large datasets, and create smarter applications. Azure-certified AI engineers play a vital role in this transformation by designing and implementing systems that leverage machine intelligence to drive growth.

The certification is particularly valuable for professionals who want to specialize in cloud-based AI solutions. With organizations increasingly migrating to cloud platforms, the demand for Azure AI engineers continues to rise. Professionals can find opportunities in sectors such as finance, healthcare, retail, and information technology.

Beyond passing the exam, professionals are encouraged to build a portfolio of AI projects that showcase their skills. A portfolio demonstrates practical experience, which is highly valued by employers.

Projects can include chatbot development, image recognition systems, predictive analytics dashboards, or custom language models. Publishing projects on platforms such as GitHub or contributing to open-source AI initiatives enhances visibility within the professional community.

Microsoft also offers community programs and technical certifications that complement the Azure AI Engineer Associate credential. Participating in AI hackathons, developer conferences, and online forums helps professionals stay connected with the evolving technology landscape.

Artificial intelligence evolves rapidly, and Microsoft continuously updates Azure services to incorporate the latest advancements. Certified professionals must engage in continuous learning to maintain their expertise.

Azure’s integration with emerging technologies such as generative AI, responsible AI frameworks, and edge computing opens new possibilities for innovation. Engineers who stay updated can leverage these advancements to build smarter and more efficient applications.

Microsoft’s learning ecosystem provides updated modules and free renewal assessments, ensuring that professionals remain certified and informed about new capabilities. Continuous learning is not only beneficial for career growth but also essential for delivering cutting-edge AI solutions in dynamic business environments.

The influence of Azure AI extends far beyond individual organizations. By enabling the development of intelligent systems, Microsoft’s AI technologies contribute to solving global challenges such as healthcare accessibility, climate monitoring, and education delivery.

For example, AI-driven predictive models are being used to anticipate disease outbreaks, optimize energy consumption, and improve agricultural yields. Certified Azure AI Engineers play a critical role in these initiatives by building scalable solutions that make a tangible difference.

By combining AI innovation with responsible implementation, Microsoft’s AI ecosystem fosters inclusive technology growth worldwide. The certification empowers professionals to be part of this transformation, helping them create AI solutions that serve humanity’s broader interests.

An Azure AI Engineer Associate is not just a developer or a data scientist. They serve as the bridge between business needs and intelligent automation, designing AI solutions that interact with data, understand natural language, interpret vision, and provide valuable insights. The role encompasses working closely with data engineers, software developers, and business stakeholders to ensure that AI solutions are practical, scalable, and aligned with organizational objectives.

AI engineers in this role are primarily responsible for using Azure Cognitive Services, Azure Machine Learning, and Knowledge Mining to create end-to-end AI applications. These professionals develop, manage, and deploy intelligent solutions that involve natural language processing, computer vision, conversational AI, and predictive modeling. Their tasks include selecting the right AI services, optimizing performance, integrating AI models into production systems, and ensuring compliance with security and ethical AI standards.

They are also expected to collaborate on data governance frameworks, evaluate model performance, and make iterative improvements. This involves not just technical proficiency but also an understanding of business logic, user experience, and responsible AI implementation. The certification ensures that professionals can effectively contribute to every stage of AI development, from conceptualization to deployment, maintenance, and continuous optimization.

A key skill validated by the Azure AI Engineer Associate certification is the ability to work effectively with Azure Cognitive Services. This suite of APIs provides pre-built AI functionalities that eliminate the need for complex model training. Candidates learn to use services like Text Analytics, Translator, Speech, Computer Vision, and Azure OpenAI Service to embed intelligence into existing systems and applications.

Cognitive Services allow developers to quickly add features such as language translation, text sentiment analysis, object detection, and speech recognition. Understanding how to configure, deploy, and manage these services securely is crucial. For example, engineers must know how to create cognitive service resources, manage keys and endpoints, and integrate APIs through REST or SDKs.
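As a sketch of REST integration, the Python below prepares a text translation request using only the standard library, without sending it. The endpoint, the `api-version=3.0` query parameter, and the two `Ocp-Apim-Subscription-*` headers reflect the Translator v3 REST interface as the editor understands it; the function name, key, and region values are placeholders.

```python
import json
import urllib.parse
import urllib.request

TRANSLATOR_ENDPOINT = "https://api.cognitive.microsofttranslator.com"


def build_translate_request(text, to_language, key, region):
    """Prepare (but do not send) a POST request that translates one string."""
    query = urllib.parse.urlencode({"api-version": "3.0", "to": to_language})
    url = f"{TRANSLATOR_ENDPOINT}/translate?{query}"
    body = json.dumps([{"Text": text}]).encode("utf-8")
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Ocp-Apim-Subscription-Region": region,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


request = build_translate_request("Hello", "fr", "fake-key", "westeurope")
print(request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would perform the call. Building it separately makes the URL, headers, and body easy to inspect and test without network access.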

Additionally, AI engineers must be able to customize these services when out-of-the-box performance does not meet the project’s specific needs. They might retrain models or fine-tune parameters using customer data to improve accuracy and contextual relevance. This balance between leveraging pre-trained models and customizing them for domain-specific tasks is one of the skills that make certified professionals highly valuable to organizations embracing AI transformation.

Azure Machine Learning (Azure ML) is a core component of the certification syllabus. It empowers engineers to create, train, and deploy custom machine learning models using a scalable cloud-based environment. The certification tests one’s ability to manage the end-to-end machine learning lifecycle, from data ingestion and preprocessing to model training, validation, and operationalization.

AI engineers must understand the different environments available within Azure ML, such as Designer, Automated ML, and Notebooks. They must know when to use a code-first or low-code approach depending on project complexity. They also learn about the importance of reproducibility, model versioning, and continuous integration/continuous delivery (CI/CD) pipelines for AI solutions.

Deploying machine learning models as web services or batch endpoints is another critical skill. Engineers must configure compute targets, manage environments, and monitor deployed models using tools like Application Insights and Azure Monitor. Security practices, such as role-based access control, managed identities, and encryption, ensure that the AI solutions they build remain robust and compliant with enterprise policies.
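Models deployed as web services in Azure ML are typically wrapped in an entry script that exposes an `init()` function, called once at startup, and a `run()` function, called per request. The sketch below follows that convention with a stand-in model; the `MeanModel` class and the `"data"` payload key are hypothetical and exist only to make the example self-contained.

```python
import json

model = None  # populated once by init(), then reused across requests


class MeanModel:
    """Hypothetical stand-in for a real trained model."""

    def predict(self, rows):
        return [sum(row) / len(row) for row in rows]


def init():
    """Load the model once when the service starts."""
    global model
    # A real entry script would deserialize a registered model from disk here.
    model = MeanModel()


def run(raw_data: str) -> str:
    """Score one request; errors are returned as JSON instead of raised."""
    try:
        rows = json.loads(raw_data)["data"]
        return json.dumps({"predictions": model.predict(rows)})
    except Exception as exc:
        return json.dumps({"error": str(exc)})
```

Loading the model in `init()` rather than in `run()` keeps per-request latency low, since deserialization happens only once.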

Another critical element of the Azure AI Engineer Associate certification is knowledge mining, which focuses on extracting insights from large volumes of unstructured and semi-structured data. This involves using Azure Cognitive Search combined with Cognitive Services and custom AI skills to build pipelines that analyze and index data efficiently.

Knowledge mining is particularly valuable for organizations that deal with massive document repositories, customer feedback, or multimedia archives. Engineers learn to design skillsets that include natural language understanding, image extraction, entity recognition, and sentiment analysis. These pipelines turn raw content into searchable, structured knowledge, enabling intelligent discovery and decision-making.

In the certification context, knowledge mining solutions often involve creating and managing indexes, configuring enrichment pipelines, and integrating the output into dashboards or applications. Understanding how to optimize search relevance, manage cognitive skill performance, and secure data access are all part of the practical competencies evaluated during the exam.
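To make the idea of an index concrete, here is a minimal sketch of an index definition in the JSON shape the search service's REST API accepts, expressed as a Python dictionary. The index name and field names are invented for illustration; the small validator only checks one structural rule (a single key field of type `Edm.String`) and is not part of any SDK.

```python
index_definition = {
    "name": "support-docs",  # hypothetical index name
    "fields": [
        {"name": "id", "type": "Edm.String", "key": True},
        {"name": "content", "type": "Edm.String", "searchable": True},
        # Fields below would be filled by enrichment skills in a skillset.
        {"name": "keyPhrases", "type": "Collection(Edm.String)",
         "searchable": True, "filterable": True},
        {"name": "sentiment", "type": "Edm.Double",
         "filterable": True, "sortable": True},
    ],
}


def validate_index(definition):
    """Return the key field's name, or raise if the key rule is violated."""
    keys = [f for f in definition["fields"] if f.get("key")]
    if len(keys) != 1:
        raise ValueError("an index needs exactly one key field")
    if keys[0]["type"] != "Edm.String":
        raise ValueError("the key field must be of type Edm.String")
    return keys[0]["name"]
```

Marking enriched fields such as `keyPhrases` as filterable is one way to let an application narrow results by the output of a cognitive skill.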

The certification also emphasizes the integration of AI solutions into real-world business processes. AI engineers must be able to embed intelligent features into web applications, chatbots, and enterprise workflows using Azure Bot Service, Power Platform, or custom APIs.

For example, conversational AI solutions developed with Azure Bot Framework and Cognitive Services enable businesses to deliver personalized customer interactions. The integration of these bots with Microsoft Teams, Dynamics 365, or custom CRM systems can enhance productivity and engagement. Engineers must also understand deployment methods, scaling strategies, and telemetry collection to ensure seamless performance across platforms.

Another common integration scenario is embedding AI capabilities into Power BI dashboards or business intelligence solutions. This allows non-technical users to interact with predictive models or automated insights. The certification ensures that candidates can design such systems with maintainability and scalability in mind, enabling organizations to extract maximum value from their AI investments.

Responsible AI is a foundational concept covered in the Azure AI Engineer Associate certification. As AI systems increasingly influence decision-making, engineers must ensure their solutions are transparent, fair, and accountable. Microsoft’s Responsible AI principles guide professionals to consider the ethical implications of their designs and deployments.

Engineers are trained to identify and mitigate bias in datasets and models. They also learn to use tools like Fairlearn and InterpretML to assess fairness and explainability. Understanding privacy laws, such as GDPR, and implementing data protection measures within Azure is essential to maintaining trust and compliance.
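One common fairness check is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below computes it by hand in plain Python so the arithmetic is visible. It is not Fairlearn's implementation, although Fairlearn offers a metric with the same name, and the sample data is made up.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (== 1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up example: group "a" is selected 3 times out of 4, group "b" never.
preds = [1, 1, 0, 1, 0, 0, 0, 0]
grps = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, grps)  # 0.75
```

A large gap does not by itself prove a model is unfair, but it flags where a closer look at the data and features is warranted.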

The certification further highlights the importance of auditability and traceability in AI workflows. Engineers are expected to document model assumptions, data lineage, and decision logic to support ethical governance. These practices not only prevent unintended consequences but also help organizations maintain credibility and regulatory compliance.

Azure AI engineers rarely work in isolation. The certification underscores collaboration as a key element of success. Engineers collaborate with data scientists who design models, data engineers who prepare datasets, and application developers who integrate AI functionalities into business systems.

A certified AI engineer must understand each team’s responsibilities and how to align them toward a shared goal. For example, they should be able to transform a data scientist’s experimental model into a production-ready service or assist developers in optimizing API performance for real-time applications.

In addition, collaboration extends to managing Azure DevOps workflows. Engineers must know how to use version control, manage artifacts, and automate deployments using CI/CD pipelines. This cross-functional teamwork ensures that AI solutions are both innovative and operationally efficient.

The certification exam, AI-102: Designing and Implementing a Microsoft Azure AI Solution, evaluates an individual’s ability to translate business requirements into technical solutions using Azure AI services. It measures five major skill domains: planning and managing an Azure Cognitive Services solution, implementing computer vision solutions, implementing natural language processing solutions, implementing knowledge mining solutions, and implementing conversational AI solutions.

Candidates preparing for this certification should develop strong hands-on experience with Azure AI Studio, Azure Machine Learning, and Bot Framework Composer. It is also important to understand REST API calls, authentication methods, and SDK integration.

Practical preparation often includes building end-to-end AI projects that combine multiple services. For instance, a candidate might create a customer support chatbot with sentiment analysis and language translation features. Such projects not only demonstrate technical mastery but also enhance conceptual understanding by connecting theoretical learning to practical outcomes.

Azure AI Engineer Associates are in high demand across industries because of the versatility of AI technologies. In healthcare, they design systems that analyze patient data and assist in diagnostics. In retail, AI-powered recommendation systems enhance customer engagement. In manufacturing, computer vision models help detect product defects in real time, improving quality control.

The financial sector uses AI engineers to automate fraud detection, assess credit risks, and streamline compliance processes. Meanwhile, government and public sector organizations rely on AI for citizen engagement and document digitization.

The certification prepares engineers to adapt these AI use cases to meet unique industry challenges. They must not only apply technical knowledge but also understand business priorities, cost constraints, and regulatory frameworks. This ability to align AI capabilities with practical business goals is what distinguishes Azure AI Engineer Associates from traditional developers or data scientists.

Azure provides an extensive ecosystem that supports AI engineers throughout the solution lifecycle. Beyond Cognitive Services and Azure ML, engineers also leverage Azure Databricks for data processing, Synapse Analytics for data integration, and Power Automate for workflow orchestration.

The seamless interoperability among Azure tools allows AI engineers to design scalable, end-to-end pipelines. They can collect and clean data, train and deploy models, and monitor applications all within a single ecosystem. Moreover, Azure’s global infrastructure ensures reliability, low latency, and compliance with international data residency requirements.

Engineers are expected to understand how to optimize resource usage and cost through automation and governance policies. This requires familiarity with Azure Cost Management, resource tagging, and autoscaling mechanisms. Effective utilization of these features ensures that organizations achieve sustainable AI implementation without overspending on cloud resources.

The Microsoft Certified: Azure AI Engineer Associate credential is not just a one-time achievement but a foundation for continuous growth. AI is evolving rapidly, and professionals must stay updated with new services, frameworks, and best practices. Microsoft encourages this through its continuous certification model, requiring professionals to renew their certification annually through short assessments.

Certified AI engineers can advance to specialized roles such as AI Solution Architect, Applied Machine Learning Engineer, or AI Product Manager. They can also pursue further certifications, such as the expert-level Microsoft Certified: Azure Solutions Architect Expert or the associate-level Azure Data Scientist Associate. These pathways demonstrate Microsoft’s commitment to nurturing a complete ecosystem of skilled cloud and AI professionals.

Furthermore, the certification enhances one’s credibility in the global job market. Employers recognize it as proof of technical competence, problem-solving ability, and adherence to Microsoft’s ethical AI standards. For professionals seeking career advancement, it serves as a strong differentiator in a competitive landscape where AI expertise is in growing demand.

The Microsoft Certified: Azure AI Engineer Associate certification prepares professionals not only to design and deploy intelligent systems but also to optimize, scale, and maintain AI solutions in complex enterprise environments. The evolution of AI technology requires engineers to move beyond simple deployments toward advanced integration strategies that involve automation, monitoring, and performance tuning. This phase of AI engineering focuses on bridging the gap between experimental models and production-grade solutions that meet business expectations for reliability, speed, and compliance.

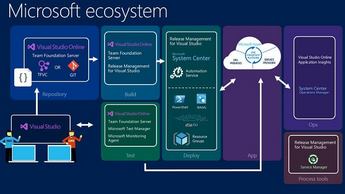

Azure’s platform offers a cohesive set of services that help engineers achieve this integration. Azure Machine Learning, Cognitive Services, Azure OpenAI, and Bot Framework collectively enable professionals to design AI-driven solutions that are both adaptive and scalable. Engineers must ensure that their designs integrate seamlessly with other components of the Azure ecosystem, such as Azure DevOps, Synapse Analytics, and Databricks. This alignment supports not only efficient workflow management but also helps achieve a balance between automation and human oversight.

Advanced AI implementation emphasizes lifecycle management. From data preparation to model deployment, every stage requires versioning, traceability, and governance. Engineers must employ automation pipelines that enable continuous integration and delivery, thereby ensuring consistent updates and smooth deployment cycles. This approach significantly reduces downtime and operational risks in production environments, establishing AI solutions as dependable assets in an organization’s digital strategy.

One of the essential skills for certified Azure AI engineers is optimizing AI solutions to achieve better accuracy, efficiency, and cost-effectiveness. Optimization extends beyond model performance and includes infrastructure management, data handling, and computational scaling.

For models built using Azure Machine Learning, optimization often begins with feature selection and algorithm tuning. Engineers can employ tools like HyperDrive for automated hyperparameter tuning, which helps identify the best model configuration with minimal manual intervention. This accelerates experimentation while reducing computational costs.
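The idea behind automated hyperparameter tuning can be sketched with a plain random search. This is a generic technique written with the standard library, not HyperDrive's API, and the `validation_loss` function is a hypothetical stand-in for actually training and evaluating a model.

```python
import random


def validation_loss(learning_rate, num_trees):
    """Hypothetical stand-in for training a model and measuring its loss."""
    return (learning_rate - 0.1) ** 2 + ((num_trees - 200) / 1000) ** 2


def random_search(trials, seed=0):
    """Sample configurations at random and keep the one with the lowest loss."""
    rng = random.Random(seed)  # fixed seed keeps runs reproducible
    best = None
    for _ in range(trials):
        config = {
            "learning_rate": rng.uniform(0.001, 0.5),
            "num_trees": rng.choice([50, 100, 200, 400]),
        }
        loss = validation_loss(**config)
        if best is None or loss < best[0]:
            best = (loss, config)
    return best
```

A managed tuning service adds what this sketch lacks: parallel trials across a compute cluster, smarter sampling strategies, and early termination of runs that are clearly underperforming.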

Performance monitoring is another vital component. Once deployed, models should be constantly evaluated using metrics such as latency, throughput, and accuracy. Azure Monitor and Application Insights provide the necessary telemetry to track these parameters and identify bottlenecks. Engineers can then fine-tune compute configurations, modify model architectures, or update input pipelines to maintain optimal performance levels.
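Once telemetry has been exported, the metrics named above reduce to simple arithmetic. The following sketch, which assumes a list of per-request latencies in milliseconds collected over a known time window, computes nearest-rank percentiles and throughput; the function names are illustrative and do not correspond to any Azure Monitor API.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms, window_seconds):
    """Summarize request latencies observed during one monitoring window."""
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "throughput_rps": len(latencies_ms) / window_seconds,
    }
```

Tail percentiles such as p95 are usually more informative than the mean, because a small share of slow requests can hide behind a healthy-looking average.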

Cost optimization is equally critical in cloud-based AI systems. Engineers must understand how to leverage Azure’s autoscaling capabilities, spot instances, and resource scheduling to minimize unnecessary spending. They should also be familiar with Azure Cost Management and Budget tools, which help track and control expenditures across AI workloads. This comprehensive approach to optimization ensures that AI systems remain sustainable, efficient, and financially viable over time.

As the field of artificial intelligence continues to evolve, Azure’s integration of OpenAI services has expanded the capabilities available to AI engineers. Through Azure OpenAI, professionals can harness the power of generative models to enhance communication, automate document analysis, and create intelligent virtual assistants.

Engineers are expected to understand how to integrate these advanced capabilities responsibly. They can use models such as GPT for natural language understanding and content generation, integrating them into chatbots, customer service systems, or analytics tools. For example, a virtual assistant in an enterprise setting might leverage Azure OpenAI to summarize meeting notes, draft customer emails, or provide intelligent responses to support queries.

However, using such generative models also introduces new challenges. Engineers must manage prompt design, input filtering, and response validation to ensure that the outputs remain accurate and contextually appropriate. Security and privacy become even more important as these systems process sensitive or proprietary information. By applying Responsible AI principles and continuous evaluation, engineers can ensure that these tools deliver business value while maintaining ethical standards.
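Input filtering and response validation can start as small, testable functions placed around the model call. The sketch below is deliberately simple: the length limits and the email pattern are arbitrary assumptions, and a production system would add far more checks, including the content filtering the platform itself provides.

```python
import re

MAX_PROMPT_CHARS = 2000  # hypothetical limit chosen for illustration
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def filter_input(user_text):
    """Trim, bound the length, and mask email addresses before prompting."""
    cleaned = user_text.strip()[:MAX_PROMPT_CHARS]
    return EMAIL.sub("[email removed]", cleaned)


def validate_response(text, max_chars=1000):
    """Return a list of problems; an empty list means these checks passed."""
    problems = []
    if not text.strip():
        problems.append("empty response")
    if len(text) > max_chars:
        problems.append("response too long")
    if EMAIL.search(text):
        problems.append("response contains an email address")
    return problems
```

Returning a list of problems instead of a boolean makes it easier to log why a response was rejected and to tune the checks over time.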

Security and compliance are fundamental considerations in every AI implementation. Certified Azure AI Engineers must design solutions that safeguard data and ensure compliance with organizational, regional, and global regulations. Azure provides multiple layers of security tools and practices to achieve this objective.

Data security begins with encryption. All sensitive data must be encrypted both in transit and at rest, with Azure Key Vault used to store and manage the encryption keys and secrets securely. Engineers should also configure access control policies through Azure Active Directory (since renamed Microsoft Entra ID) and role-based access control (RBAC) to ensure that only authorized users can interact with critical AI assets.

Compliance management involves understanding frameworks such as GDPR, ISO 27001, and HIPAA, and implementing the necessary controls within Azure’s environment. Engineers can use Azure Policy to enforce organizational standards and assess compliance status across AI resources. Additionally, logging and auditing features allow for traceability, ensuring that all data handling and processing activities can be reviewed for compliance verification.

In AI projects, engineers must also protect against adversarial attacks and data poisoning. Implementing input validation, secure API endpoints, and model retraining protocols helps reduce vulnerabilities. Responsible governance of data access and model deployment processes contributes to building a secure and transparent AI ecosystem that aligns with both legal and ethical guidelines.

Automation plays a crucial role in modern AI solution deployment. Azure DevOps and MLOps practices allow AI engineers to automate model training, evaluation, and deployment pipelines. This ensures consistency, scalability, and reproducibility across environments.

In Azure, engineers can create automated workflows that trigger retraining when new data is available or performance metrics decline. These pipelines are built using Azure Pipelines, GitHub Actions, or Machine Learning Designer components. Automation reduces manual effort while maintaining continuous improvement of model performance.

MLOps also introduces governance to AI workflows. Version control for datasets, models, and code ensures traceability. Engineers can roll back to earlier versions when performance issues arise, maintaining a stable and controlled environment. Automated testing and validation within CI/CD pipelines help identify errors early, reducing the risk of deploying faulty models to production.
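The rollback behavior described above can be pictured with a toy, in-memory registry. This is only a sketch of the concept: the `ModelRegistry` class is hypothetical, whereas a real workflow would rely on the model registry in Azure Machine Learning and on version control for code and data.

```python
class ModelRegistry:
    """In-memory sketch of versioned model records with rollback."""

    def __init__(self):
        self._versions = []  # one metadata dict per registered version
        self._active = None  # version number currently serving traffic

    def register(self, metadata):
        """Store a new version, make it active, and return its number."""
        version = len(self._versions) + 1
        self._versions.append(dict(metadata, version=version))
        self._active = version
        return version

    def rollback(self):
        """Reactivate the previous version, for example after a regression."""
        if self._active is None or self._active <= 1:
            raise ValueError("no earlier version to roll back to")
        self._active -= 1
        return self._active

    @property
    def active(self):
        if self._active is None:
            raise ValueError("no model registered yet")
        return self._versions[self._active - 1]
```

Recording the dataset version alongside each model, as the metadata dictionary allows, is what makes it possible to trace a performance regression back to a specific data change.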

By integrating MLOps practices, organizations achieve faster AI innovation cycles while maintaining compliance and quality. This approach aligns perfectly with Microsoft’s vision for scalable, responsible, and efficient AI deployment across industries.

The rise of edge computing has expanded the scope of AI implementation in real-time environments. Azure AI services now integrate seamlessly with Azure IoT Edge, enabling AI models to operate close to where data is generated. This capability is particularly important for industries such as manufacturing, transportation, and healthcare, where immediate decision-making is critical.

Azure AI engineers can deploy trained models to edge devices, allowing predictions and inferences without continuous reliance on cloud connectivity. This reduces latency and enhances reliability, even in environments with limited internet access. Engineers must manage containerized deployments, whether as IoT Edge modules on the device or through Azure Container Instances and Azure Kubernetes Service in the cloud, to ensure flexibility and scalability.

Edge AI also emphasizes resource optimization. Engineers must tailor models for smaller computational environments, often using model compression techniques or lightweight architectures. Azure Percept and other tools provide simplified deployment mechanisms for edge AI, helping organizations expand their intelligent systems into new operational territories while maintaining the same standards of accuracy and performance found in the cloud.
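One widely used compression technique is quantization, which stores weights as small integers plus a scale factor. The sketch below shows symmetric linear quantization to the 8-bit range in plain Python. It illustrates the arithmetic only; real deployments would use the quantization tooling of their ML framework rather than code like this.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]
```

Each recovered weight differs from the original by at most about half the scale, which is the accuracy cost paid for storing one byte per weight instead of four.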

AI becomes most valuable when it integrates with business workflows and enhances productivity. Azure AI engineers often extend their solutions to Microsoft Power Platform, which includes Power Apps, Power Automate, and Power Virtual Agents. This combination empowers non-technical users to access AI-driven insights and automation without deep coding expertise.

For instance, Power Automate can use AI Builder models to automate invoice processing, customer feedback classification, or image recognition tasks. Engineers design and publish models that integrate directly into these low-code tools, creating seamless business automation pipelines. This democratization of AI fosters a culture of innovation, where employees can harness machine learning capabilities for their day-to-day operations.

In enterprise settings, integrating AI with Power Platform helps bridge the gap between IT and business teams. Engineers can create reusable AI components, reducing redundancy and accelerating solution development. The ability to monitor performance, retrain models, and update applications centrally ensures long-term efficiency and adaptability.

High-quality data remains the foundation of every AI project. Azure AI engineers must establish robust data pipelines that ensure continuous access to clean, relevant, and updated information. Azure Data Factory and Synapse Analytics serve as essential tools for orchestrating these data workflows.

Data engineers and AI professionals collaborate to define data ingestion, transformation, and validation processes. Engineers must ensure that the data pipelines can handle structured and unstructured data from multiple sources such as IoT devices, APIs, and databases. Implementing checkpoints and monitoring mechanisms prevents data inconsistencies and ensures reliability.

Moreover, AI engineers are responsible for data versioning and reproducibility, especially when retraining models. Using Azure Machine Learning Datasets, engineers can maintain version control and ensure consistent experiments. Integrating data lineage tracking helps identify potential sources of bias or errors, thereby maintaining transparency in model outcomes.

Efficient data management not only improves model accuracy but also reduces time spent in preprocessing stages. This allows teams to focus more on innovation and less on repetitive manual tasks.

Maintaining AI model performance is an ongoing process. Engineers must continuously evaluate deployed models to ensure they deliver accurate predictions as data patterns evolve. Azure provides monitoring tools that track metrics such as prediction drift, accuracy degradation, and latency fluctuations.

When performance declines, retraining becomes essential. Engineers must design automated retraining strategies that incorporate recent data to refresh model parameters. These retraining cycles can be scheduled periodically or triggered by specific performance thresholds. Azure ML Pipelines facilitate these updates with minimal disruption to ongoing operations.
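A threshold-based retraining trigger needs a number to compare against the threshold. One common choice for numeric features is the Population Stability Index (PSI), sketched below with the standard library. The bin count, the epsilon used for empty bins, and the 0.25 threshold are conventional rules of thumb adopted here as assumptions, and this is not the drift metric Azure ML itself computes.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # constant baseline: everything lands in the first bin

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(baseline, recent, threshold=0.25):
    """Flag retraining when the input distribution has shifted noticeably."""
    return psi(baseline, recent) > threshold
```

A scheduled pipeline step could call `should_retrain` on each important feature and start a retraining run only when at least one of them crosses the threshold.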

Another aspect of maintenance is model explainability. Tools like SHAP and LIME can be integrated into Azure ML to interpret model behavior. Providing insights into why a model makes a particular prediction helps organizations build trust and comply with regulatory requirements. Engineers must also maintain proper documentation of these explainability metrics for audit purposes.

By adopting structured maintenance practices, AI engineers ensure that their solutions remain accurate, trustworthy, and aligned with evolving business needs.

As AI projects grow in complexity, effective collaboration becomes increasingly vital. Azure AI engineers must work closely with cross-functional teams that include data scientists, developers, project managers, and domain experts. Communication ensures that technical solutions align with business objectives and user expectations.

Microsoft Teams and Azure DevOps provide collaborative platforms where team members can share progress, track issues, and document milestones. Engineers should also implement standardized reporting formats for model performance, cost summaries, and data updates to maintain transparency across teams.

Cross-functional collaboration encourages knowledge sharing and fosters innovation. Engineers learn from other specialists, improving their understanding of how AI integrates into different domains such as healthcare, finance, or logistics. This synergy ultimately enhances the overall quality and relevance of the solutions developed.

As artificial intelligence becomes the cornerstone of digital transformation, the Microsoft Certified: Azure AI Engineer Associate credential continues to gain significance. This certification not only validates an individual’s ability to design and deploy intelligent solutions but also equips professionals with the insight to navigate the rapidly evolving AI landscape. Azure has positioned itself as a central platform for enterprises seeking to combine advanced machine learning, natural language understanding, and generative AI capabilities. In this final part, the focus turns toward the future of AI engineering, emerging innovations, and the expanding opportunities that await certified professionals in the years ahead.

The ecosystem surrounding Azure AI is continuously evolving. With the introduction of advanced services like Azure OpenAI, Cognitive Search enhancements, and improved integration with the Power Platform, engineers can build solutions that adapt intelligently to user behavior, data context, and environmental conditions. The role of an Azure AI Engineer is no longer limited to deploying static models; it now extends into designing adaptive systems that learn, reason, and interact with users in increasingly human-like ways. This evolution underscores the importance of continuous learning and adaptability for anyone pursuing or maintaining this certification.

As organizations strive to integrate AI across all operational levels, the demand for professionals capable of orchestrating complex systems on Azure continues to accelerate. From automating customer interactions to driving predictive analytics in manufacturing and healthcare, AI is redefining efficiency, scalability, and personalization. Certified Azure AI engineers sit at the forefront of this transformation, shaping how intelligent systems enhance productivity, security, and innovation globally.

The responsibilities of an Azure AI Engineer are expanding far beyond conventional boundaries. Traditionally, the role focused on designing machine learning models and deploying them using Azure Machine Learning and Cognitive Services. However, the modern AI engineer must now understand data architecture, model lifecycle management, ethical AI design, and the integration of generative models.

This evolution means engineers need to collaborate across broader interdisciplinary teams that include data scientists, software developers, security specialists, and compliance officers. They act as translators, bridging business objectives with technical feasibility. Their expertise ensures that AI applications remain efficient, interpretable, and aligned with ethical and regulatory frameworks.

The future Azure AI engineer will also take a leadership role in driving organizational AI maturity. They will guide decision-makers on choosing the right Azure tools, optimizing resource allocation, and establishing responsible AI governance frameworks. This combination of technical depth and strategic vision makes the certification not just a validation of skill but also a pathway to leadership in the digital enterprise era.

Generative AI represents a major turning point in artificial intelligence applications. Azure’s partnership with OpenAI has unlocked powerful capabilities for natural language understanding, image generation, and advanced problem-solving. Through Azure OpenAI, engineers gain access to models like GPT and DALL·E, which can generate text, summarize information, create visual content, and perform reasoning tasks that previously required human input.

Certified AI engineers are uniquely positioned to harness these models responsibly. They must understand prompt engineering, fine-tuning, and output control to ensure results are accurate, secure, and relevant to specific business needs. Whether it is automating report generation, assisting in document summarization, or enhancing virtual assistants, these generative models transform how enterprises handle data and communication.

Furthermore, integration of generative AI into Azure ecosystems enables new solutions that combine automation with creativity. Engineers can deploy these models alongside traditional AI services like Computer Vision, Translator, and Speech to build multimodal systems capable of understanding context and interacting naturally. As businesses adopt these technologies, engineers must ensure compliance with privacy standards, ethical use policies, and data protection guidelines that align with Microsoft’s Responsible AI principles.

With increasing automation and decision-making by AI systems, ethical governance has become a defining concern. Azure AI Engineer Associates play a critical role in ensuring that the systems they develop operate within ethical boundaries. Microsoft’s Responsible AI framework emphasizes fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability.

Engineers must implement these principles throughout the AI lifecycle. During data collection, they must assess potential bias and ensure diversity in datasets. In model design, they should apply fairness metrics and interpretability tools to evaluate outputs. Post-deployment, continuous monitoring helps detect deviations, enabling timely retraining or adjustments.

Tools like Fairlearn and InterpretML within Azure Machine Learning assist engineers in understanding how models behave and why certain predictions are made. Documentation and audit trails are equally essential, as they support accountability and trust among stakeholders. Certified professionals who master these governance practices contribute not only to technical excellence but also to societal trust in artificial intelligence systems.

As governments and industries strengthen AI regulations, engineers will increasingly collaborate with compliance teams to align projects with evolving standards. The ability to navigate this regulatory landscape while maintaining innovation will distinguish Azure AI professionals from general developers or data specialists.

The democratization of AI is one of the most promising trends shaping the future of Azure. Microsoft’s integration of AI into low-code and no-code environments like Power Automate, Power Apps, and Power Virtual Agents empowers business users to create intelligent applications without extensive programming experience.

Azure AI engineers play an instrumental role in this movement by developing reusable AI components and APIs that integrate seamlessly with these platforms. They design and publish pre-trained models for tasks such as document classification, object detection, or language translation, which can be easily consumed by non-technical users.

This democratization fosters a collaborative AI culture within organizations, where innovation is no longer confined to IT departments. Engineers provide the technical foundation, ensuring that the models used in these tools are accurate, secure, and scalable. As a result, businesses can accelerate digital transformation and respond rapidly to changing market conditions.

Moreover, this approach reduces the technical barrier to AI adoption. With intuitive interfaces and guided workflows, employees at all levels can participate in creating AI-driven automation, transforming business processes, and improving customer engagement. Azure AI engineers thus become enablers of enterprise-wide intelligence.

The impact of Azure AI extends across virtually every sector. In healthcare, AI engineers build systems that analyze medical imaging and assist in diagnostics. In finance, they develop models that detect fraudulent transactions and enhance risk assessment. Retail industries rely on AI to personalize shopping experiences and forecast demand, while logistics and manufacturing use predictive maintenance and computer vision for quality assurance.

Each industry presents unique challenges that require customized AI solutions. Engineers must tailor their designs to handle domain-specific data formats, regulatory constraints, and performance expectations. For example, in the energy sector, engineers use Azure Machine Learning to predict equipment failures and optimize resource consumption. In the education sector, AI systems support personalized learning paths based on student performance and engagement analytics.

By combining Cognitive Services, Machine Learning, and Power Platform integration, Azure AI engineers can build domain-adaptable frameworks. These modular architectures simplify deployment, monitoring, and scalability across diverse industries. The ability to apply general AI principles to specialized environments further reinforces the versatility and value of the certification.

Sustainability has emerged as a priority in technology design, and AI engineers have a significant role to play in advancing this mission. Microsoft’s commitment to becoming carbon negative drives the integration of sustainability principles into Azure services. Engineers must design AI systems that minimize energy consumption and promote resource optimization.

Efficient model training practices, such as distributed computing and low-power inference, contribute to reducing the environmental footprint of AI. Engineers can use Azure Machine Learning’s compute management capabilities, such as scaling clusters down when idle and scheduling jobs, to avoid wasted compute; where data on grid carbon intensity is available, workloads can also be timed for periods of higher renewable energy availability. Additionally, AI can be used to analyze sustainability metrics, forecast energy consumption, and identify waste reduction opportunities.

For industries committed to environmental goals, AI-driven analytics can provide actionable insights. For instance, in manufacturing, computer vision can detect material inefficiencies, while in agriculture, AI models can monitor crop health using satellite imagery. Certified Azure AI professionals who understand the relationship between technology and sustainability will be increasingly valuable as organizations align their operations with environmental responsibility.

The world of AI evolves at a pace that requires continuous learning. Microsoft helps certified professionals stay current through ongoing learning paths and renewal assessments. The Azure AI Engineer Associate certification expires after one year and is renewed by passing a free online assessment on Microsoft Learn, which encourages professionals to keep pace with innovations in AI technologies and Azure’s expanding ecosystem.

Continuous learning extends beyond technical skills. Engineers must also enhance their understanding of emerging ethical, regulatory, and economic dimensions of AI. Engaging with the global AI community, participating in Microsoft Learn programs, and contributing to open-source projects are effective ways to maintain relevance.

As AI becomes more integrated with cloud computing, cybersecurity, and data science, engineers can pursue further certifications such as Azure Solutions Architect Expert or Azure Data Scientist Associate. These credentials complement the Azure AI Engineer certification and open pathways to specialized or leadership roles. Lifelong learning helps professionals remain adaptive, forward-thinking, and capable of leading innovation in their respective fields.

Microsoft’s AI vision emphasizes empowerment—helping organizations and individuals achieve more through intelligent technology. This vision extends through Azure’s AI services, which democratize access to machine learning and cognitive computing on a global scale. Engineers certified under this framework contribute to this vision by enabling solutions that are inclusive, responsible, and transformative.

Microsoft’s AI for Good initiatives are increasingly driving societal change. For example, AI for Accessibility uses machine learning to assist individuals with disabilities, while AI for Earth focuses on sustainability and environmental preservation. AI for Humanitarian Action supports crisis response and resource distribution in disaster-affected areas. Engineers trained in Azure technologies can contribute their expertise to projects of this kind, aligning professional growth with meaningful global impact.

By applying their skills to socially beneficial initiatives, Azure AI engineers help bridge technological advancement with ethical purpose. This alignment of innovation and humanity ensures that AI becomes a tool for collective progress rather than exclusive advantage.

The next decade will witness the rise of more sophisticated and autonomous AI systems. Concepts like self-learning models, cognitive digital twins, and hybrid AI architectures are gaining momentum. Azure continues to evolve to support these innovations through advancements in model optimization, real-time analytics, and multi-agent collaboration systems.

Engineers must prepare for an era where AI becomes more context-aware and capable of reasoning beyond predefined algorithms. The integration of quantum computing, 5G connectivity, and edge intelligence will expand AI’s reach into previously inaccessible domains. Certified professionals will need to understand how to design systems that leverage these technologies efficiently.

Additionally, the growing interconnection between AI and cybersecurity will redefine protection strategies. AI models will not only detect threats but also adapt their defensive mechanisms in real time. Azure AI engineers will be at the forefront of developing such systems, combining predictive analytics with automation for proactive security solutions.

The ability to adapt, learn, and innovate will determine success in this new era. The Microsoft Certified: Azure AI Engineer Associate credential serves as both a foundation and a gateway for this continuous evolution, preparing professionals to lead the intelligent transformation shaping industries worldwide.

The Microsoft Certified: Azure AI Engineer Associate Certification stands as a powerful benchmark of professional credibility and technical expertise in the rapidly advancing world of artificial intelligence. It is more than just a validation of skills—it reflects a comprehensive understanding of how AI can be responsibly designed, deployed, and managed within modern cloud environments. Throughout the journey of earning this certification, professionals gain an in-depth understanding of natural language processing, computer vision, conversational AI, and cognitive services, all of which are transforming the way businesses operate and innovate.

Achieving this certification is not merely an academic accomplishment but a strategic investment in one’s professional growth. As artificial intelligence continues to shape industries ranging from finance and healthcare to manufacturing and education, the demand for skilled Azure AI engineers is expected to keep growing. This certification helps professionals meet that demand with confidence, backed by hands-on experience in building intelligent solutions using Microsoft’s AI tools and frameworks. It strengthens an individual’s ability to translate business challenges into AI-driven solutions that deliver measurable impact, enhance productivity, and foster innovation.

The certification also aligns with the evolving needs of organizations that are transitioning toward data-driven decision-making. Certified professionals become valuable contributors to enterprise AI strategies, capable of designing scalable architectures, ensuring compliance with data protection standards, and deploying machine learning models that align with real-world objectives. The skills developed through this certification go beyond technical proficiency—they cultivate analytical thinking, problem-solving ability, and the capacity to lead AI initiatives from concept to implementation.

Moreover, earning the Azure AI Engineer Associate credential opens the door to a wide range of career paths, from AI engineering and data science to machine learning operations and cloud architecture. It demonstrates to employers a commitment to excellence, adaptability to emerging technologies, and mastery of Microsoft’s AI ecosystem. In a competitive job market, this credential sets professionals apart as trusted experts capable of building intelligent applications that improve user experiences and streamline business processes.

The certification journey also encourages continuous learning and engagement with the broader AI community. Microsoft’s ecosystem evolves rapidly, and certified professionals benefit from ongoing access to learning resources, updated tools, and opportunities for collaboration. This continuous development ensures that Azure AI Engineers remain at the forefront of innovation, adapting to new AI models, ethical standards, and deployment methodologies.

Ultimately, the Microsoft Certified: Azure AI Engineer Associate Certification is not just about mastering technology—it is about driving meaningful transformation. It empowers professionals to design solutions that are ethical, efficient, and sustainable, contributing to a future where artificial intelligence augments human potential rather than replacing it. The certification reflects a deep commitment to shaping the future of intelligent computing, where AI and cloud technology converge to redefine how businesses operate and how people interact with technology.

By completing this certification, individuals become part of a global network of AI professionals dedicated to advancing the capabilities of intelligent systems. It reinforces the importance of lifelong learning, collaboration, and responsible AI deployment. For organizations, hiring Azure AI-certified professionals translates into a competitive advantage—one rooted in innovation, precision, and trust.

In essence, the Microsoft Certified: Azure AI Engineer Associate Certification embodies the future of AI leadership. It bridges the gap between theoretical knowledge and real-world implementation, preparing professionals to lead AI transformation across industries. It is a symbol of readiness for the digital era, a recognition of skill and passion, and a stepping stone toward becoming a key player in the global AI revolution.

Study with ExamSnap to prepare for the Microsoft Certified: Azure AI Engineer Associate certification with Practice Test Questions and Answers, a Study Guide, and a comprehensive Video Training Course. The Microsoft Certified: Azure AI Engineer Associate Certification Exam Dumps use the popular VCE format and are compiled by industry experts so that you get verified answers. Our product team keeps the Microsoft Certified: Azure AI Engineer Associate Practice Test Questions and Exam Dumps up to date.
